A new sub-series of For A Parallel World, giving some development pointers on how to make software take better advantage of the parallel computing resources available on modern multicore systems. I hope some people find it interesting!

While I was looking for a (yet unfound) scripting language that would let me easily write FFmpeg conversion scripts that execute in parallel, I decided to finally take a look at OpenMP, which is something I had wanted to do for quite a while. Thanks to some Intel documentation, I tried it out on the old benchmark I used to compare glibc, glib and libavcodec/FFmpeg at byte swapping.

The byte swapping routine was adapted to this (note that the index is ssize_t, which leaves an opening for breakage when len is bigger than SSIZE_MAX):

    void test_bswap_file(const uint16_t *input, uint16_t *output, size_t len) {
        ssize_t i;

    #ifdef _OPENMP
    #pragma omp parallel for
    #endif
        for (i = 0; i < len/2; i++) {
            output[i] = GUINT16_FROM_BE(input[i]);
        }
    }
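For anyone who wants to try the same loop without pulling in glib, here is a minimal sketch. The names `swap16` and `bswap_buffer` are made up for illustration, and `swap16` always swaps, so it only matches `GUINT16_FROM_BE` on a little-endian host. The signed index is kept on the assumption that the OpenMP version in use wants a signed loop variable, as older versions of the specification did.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stand-in for GUINT16_FROM_BE on a little-endian host:
 * unconditionally swaps the two bytes of a 16-bit value. */
static inline uint16_t swap16(uint16_t v) {
    return (uint16_t)((v << 8) | (v >> 8));
}

/* len is the buffer size in bytes, like in the routine above. */
void bswap_buffer(const uint16_t *input, uint16_t *output, size_t len) {
    const size_t count = len / 2;

    /* Where size_t and ptrdiff_t have the same width, len / 2 always fits;
     * the assert only documents that assumption. */
    assert(count <= (size_t)PTRDIFF_MAX);

    const ptrdiff_t n = (ptrdiff_t)count;
    ptrdiff_t i;

    /* Without -fopenmp the pragma is skipped and the loop runs serially. */
#ifdef _OPENMP
#pragma omp parallel for
#endif
    for (i = 0; i < n; i++) {
        output[i] = swap16(input[i]);
    }
}
```

Built with `gcc -fopenmp` the loop is split across the available threads; built without it, the very same source gives the serial baseline to compare against.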

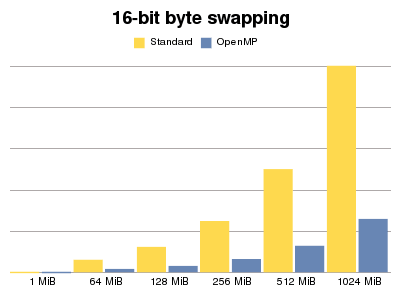

and I compared the execution time, through the @rdtsc@ instruction, between the standard code and the OpenMP-powered one, with GCC 4.3. The result actually surprised me. I expected I would have needed to make this much more complex, like breaking it into multiple chunks, to be able to make it fast. Instead it actually worked nice running one cycle on each:

The results are the average of 100 runs for each size. The input is a file on disk accessed through the VFS, not urandom. The absolute values are not important: they are the output of rdtsc(), so they are only useful for comparison.
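The averaging described above can be sketched roughly as follows. This is not the original benchmark code: `read_ticks`, `average_ticks` and `bump` are hypothetical names, the `__rdtsc()` intrinsic is assumed to come from `<x86intrin.h>` on GCC-compatible x86 compilers, and a `clock_gettime` fallback is added for other architectures.

```c
#define _POSIX_C_SOURCE 199309L
#include <assert.h>
#include <stdint.h>
#include <time.h>
#if defined(__x86_64__) || defined(__i386__)
#include <x86intrin.h>
#endif

/* Returns a raw tick count: the TSC on x86, nanoseconds elsewhere.
 * Either way the value is only good for relative comparisons. */
static uint64_t read_ticks(void) {
#if defined(__x86_64__) || defined(__i386__)
    return __rdtsc();
#else
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (uint64_t)ts.tv_sec * 1000000000u + (uint64_t)ts.tv_nsec;
#endif
}

/* Calls fn(arg) `runs` times and returns the average tick count per call. */
uint64_t average_ticks(void (*fn)(void *), void *arg, unsigned runs) {
    assert(runs > 0);
    uint64_t total = 0;
    for (unsigned r = 0; r < runs; r++) {
        const uint64_t start = read_ticks();
        fn(arg);
        total += read_ticks() - start;
    }
    return total / runs;
}

/* Trivial demo workload: counts how many times it was called. */
static void bump(void *arg) {
    ++*(unsigned *)arg;
}
```

A call like `average_ticks(bump, &calls, 100)` mirrors the 100 runs per size used here; the byte swapping routine would be wrapped in a small function with the same `void (*)(void *)` shape. Keep in mind that the counter is read on the calling thread only, so on systems where the TSC is not synchronized between cores the numbers can be noisy.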

Now of course this is a very lame way to use OpenMP, and there are much better, more complex ways to make use of it, but all in all I see quite a few interesting patterns arising. One very interesting note is that I could make use of this in quite a few of the audio output conversion functions in xine-lib, if I were able to resume dedicating time to working on that. Unfortunately, lately I’ve been quite limited in time, especially since I don’t have a stable job.

But anyway, it’s interesting to know; if you’re a developer interested in making your code faster on modern multicore systems, consider taking some time to play with OpenMP. With some luck I’m going to write more about it in the future on this blog.

Crucial detail omitted: how many cores/CPUs are there on that system? From the look of it, it’s at least 4 CPUs. But is that 4 (i.e. near linear scaling even with naive OpenMP code) or more?

Good call, I forgot about that “detail” even though I did gather the data about it. The system is my workstation, which is 8-way (dual quad-core), with the default threading settings for OpenMP (8 threads). I intend to work on some more details, like checking how OpenMP behaves when changing the number of threads (both reducing and increasing it, even beyond the number of available cores), and trying less lame code (splitting the area to swap into decently-sized arrays, so that the cache is not thrown away too easily). Unfortunately tonight I didn’t have time to deal with that and I forgot to mention that data; sorry, huge mistake on my side.
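The two follow-up experiments mentioned here, changing the thread count and handing each thread decently-sized blocks, could be tried with something like the sketch below. It is an assumption about how one might set this up, not code from the benchmark: `bswap_chunked` and `swap16` are hypothetical names, and the standard `num_threads` and `schedule(static, chunk)` clauses are used to control the team size and the block size.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: unconditionally swaps the bytes of a 16-bit value. */
static inline uint16_t swap16(uint16_t v) {
    return (uint16_t)((v << 8) | (v >> 8));
}

/* count:   number of 16-bit elements to swap
 * threads: requested number of OpenMP threads
 * chunk:   number of consecutive iterations handed to a thread at a time */
void bswap_chunked(const uint16_t *input, uint16_t *output, size_t count,
                   int threads, int chunk) {
    assert(threads > 0 && chunk > 0);
    /* The loop index is signed, so refuse counts that would not fit. */
    assert(count <= (size_t)PTRDIFF_MAX);

    const ptrdiff_t n = (ptrdiff_t)count;
    ptrdiff_t i;

    /* Without -fopenmp this is a plain serial loop. */
#ifdef _OPENMP
#pragma omp parallel for num_threads(threads) schedule(static, chunk)
#endif
    for (i = 0; i < n; i++) {
        output[i] = swap16(input[i]);
    }
}
```

Calling it in a loop with `threads` going from 1 up past the 8 available cores, and with a few different `chunk` values, would show how the scaling and the cache behaviour change; which values work best is something only measurement on the actual machine can tell.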